AI systems generate metrics constantly. Every interaction, evaluation, and deployment produces numbers that promise clarity but often deliver the opposite. Accuracy scores, performance indicators, and evaluation outputs accumulate quickly, and before long, teams find themselves surrounded by data without a clear sense of direction. The problem isn't a lack of information. It's the difficulty of understanding which AI metrics matter right now.

In day-to-day work, evaluating AI performance is rarely straightforward. Even when using structured evaluation tools, raw outputs don't always explain what should change, where an AI agent needs adjustment, or which signals deserve immediate attention. Metrics exist, but they're fragmented across dashboards, logs, and reports that teams don't check often enough.

As a result, important insights arrive late or not at all. This is where the gap between metrics and decisions becomes clear. Most decisions about AI systems don't happen inside analytics tools. They happen in conversations, during quick discussions, and in moments where teams decide whether to act or move on. More often than not, those moments happen in Slack.

When AI metrics live somewhere else, they struggle to influence real outcomes. Adding more dashboards or collecting more data doesn't solve this problem. What teams actually need is prioritization. Metrics only become useful when they are contextual, timely, and visible at the exact moment a decision is being made. Without that context, even the most accurate evaluation results fade into background noise.

The Problem with AI Metrics in Daily Work

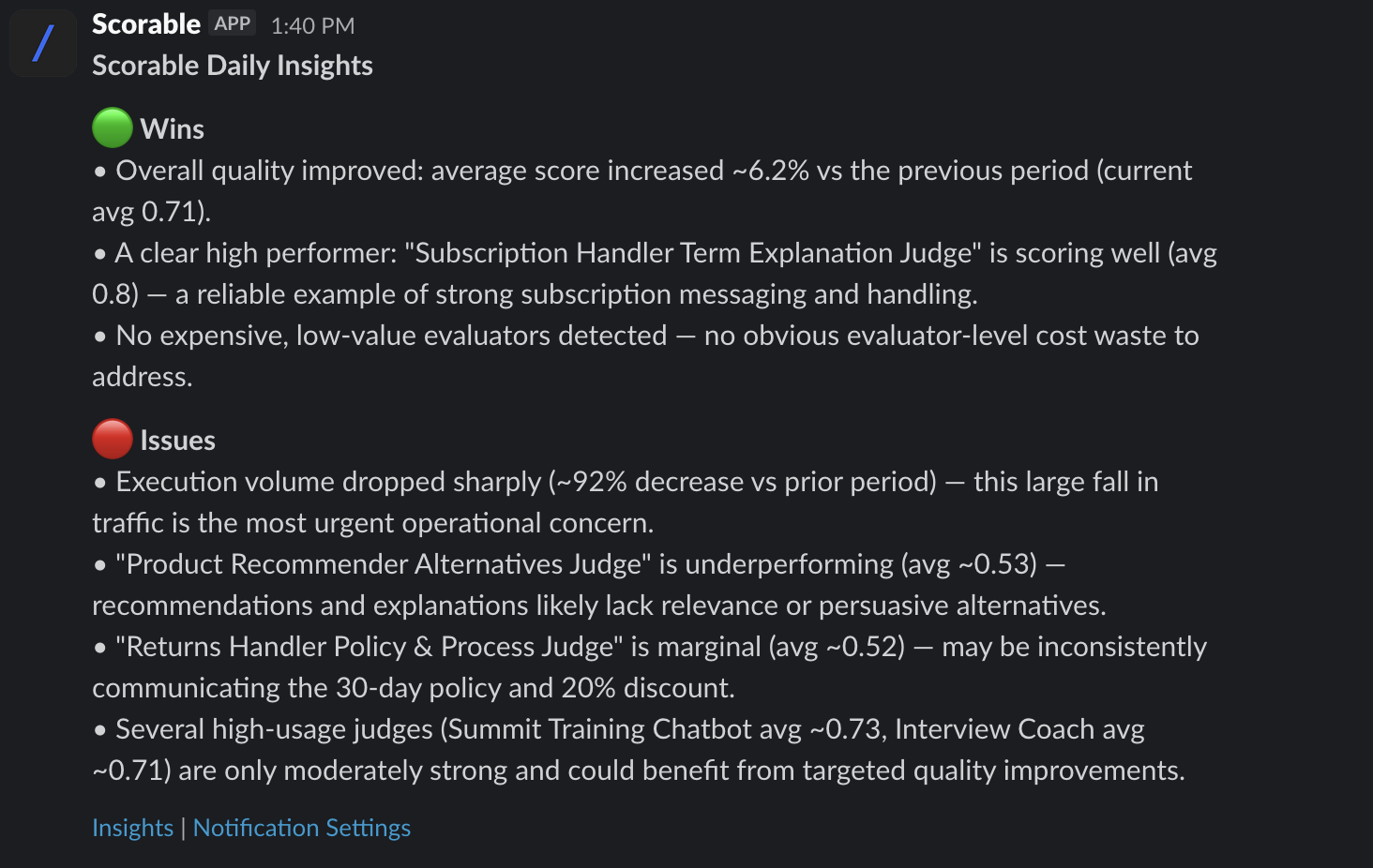

This is the thinking behind the Scorable Slack app. By connecting Scorable to Slack, evaluation metrics are delivered directly into the space where teams already collaborate. Instead of pulling people toward yet another interface, insights meet them where they are already working.

When evaluation results appear in Slack, they gain immediate relevance. Teams can see how their application behaves in real-world scenarios, recognize what's working, notice what's drifting, and understand where an AI agent needs adjustment. Metrics stop being abstract numbers and start becoming shared signals that guide action.
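To make the idea of "noticing what's drifting" concrete, here is a minimal sketch of how a set of evaluation scores could be reduced to a short, prioritized message. It is not how the Scorable Slack app is implemented. The metric names, the baseline values, the `tolerance` threshold, and the helpers `MetricReading`, `drifting`, and `slack_payload` are all assumptions made up for illustration.

```python
import json
from dataclasses import dataclass


@dataclass
class MetricReading:
    name: str        # hypothetical evaluator name, e.g. "faithfulness"
    baseline: float  # score the team treats as normal (assumed)
    current: float   # latest evaluation score (assumed)


def drifting(readings, tolerance=0.05):
    """Return readings that fell more than `tolerance` below baseline, largest drop first."""
    flagged = [r for r in readings if r.baseline - r.current > tolerance]
    return sorted(flagged, key=lambda r: r.baseline - r.current, reverse=True)


def slack_payload(readings, tolerance=0.05):
    """Build a {"text": ...} message body, or None when nothing needs attention."""
    flagged = drifting(readings, tolerance)
    if not flagged:
        return None  # stay quiet rather than add noise to the channel
    lines = [f"{len(flagged)} metric(s) dropped below baseline:"]
    for r in flagged:
        lines.append(f"- {r.name}: {r.current:.2f} (baseline {r.baseline:.2f})")
    return {"text": "\n".join(lines)}


# Illustrative values only; these are not real evaluation results.
readings = [
    MetricReading("faithfulness", baseline=0.90, current=0.78),
    MetricReading("helpfulness", baseline=0.85, current=0.84),
    MetricReading("safety", baseline=0.99, current=0.93),
]
payload = slack_payload(readings)
print(json.dumps(payload, indent=2))
```

The sketch only builds the message. A JSON body with a `text` field is the basic shape a Slack incoming webhook accepts, so posting it to a team's own webhook URL would be one way to deliver it. Returning `None` when nothing has drifted reflects the point of this article: a channel message should show up only when there is something to act on.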

Over time, this shift changes how teams interact with AI evaluation data. The focus moves away from scanning dashboards and toward responding to meaningful signals. Iteration becomes faster, decisions become more confident, and the friction between evaluation and action begins to disappear.

Bringing AI Metrics Where Decisions Actually Happen

The goal is to be helpful in the places where teams already work, without disrupting existing workflows.

In the end, the challenge with AI metrics has never been about quantity. It has always been about relevance. Teams don't need more numbers. They need to know which ones matter today, and they need to see them at the moment decisions are made.

That's when metrics finally start doing their job.